Gen 6: Event Sourcing - Capturing the History of State Changes

As our e-commerce platform continues to evolve, we face new requirements that challenge our current architecture. Auditors need a complete history of critical business entities. Operations teams want to understand exactly how orders reached their current state. Business analysts need historical trends that can't be derived from the current state alone.

These needs point to a fundamental limitation in our current approach: we capture only the current state of our domain objects, losing the rich history of how they evolved. This brings us to the sixth generation in our architectural evolution: Event Sourcing.

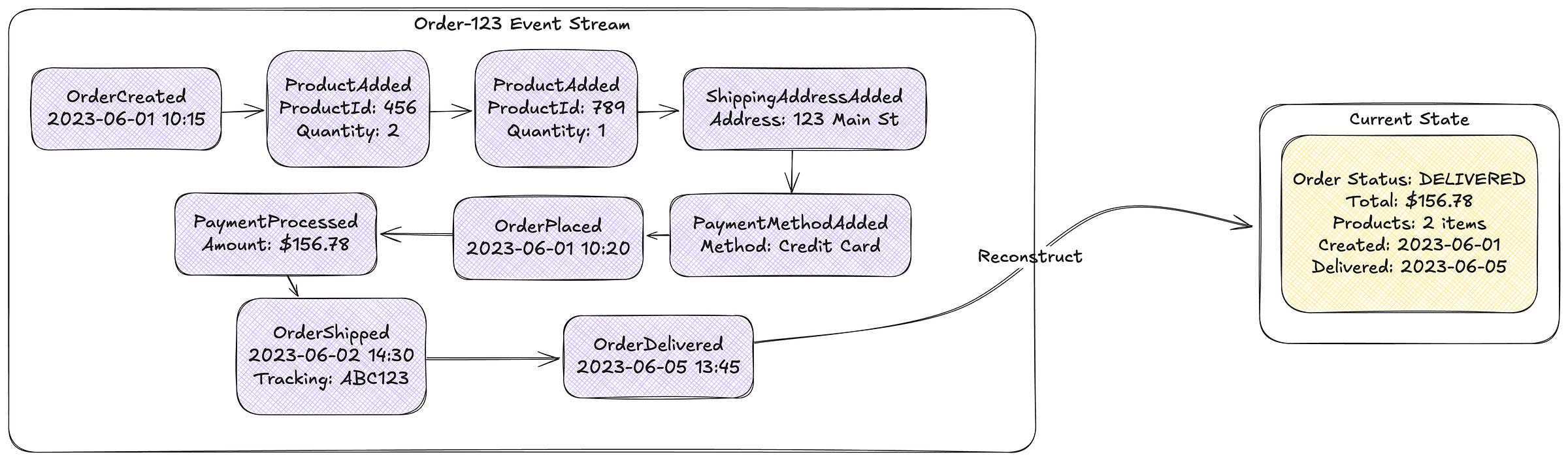

Event Sourcing fundamentally changes how we think about state. Instead of storing the current state of our entities, we store the complete sequence of events that led to that state. The current state becomes a projection derived from applying these events in sequence. This preserves the entire history of our domain objects and enables powerful capabilities that would be difficult or impossible with traditional state-based persistence.
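Here is a minimal sketch of that idea: an order total that is never stored, only derived by folding over line-item events. The event types and the `OrderTotal` class are hypothetical illustrations, not part of the platform's actual model.

```java
import java.util.List;

// Hypothetical event types, used only to illustrate the idea.
interface LineItemEvent {}
record ItemAdded(String sku, int quantity, long unitPriceCents) implements LineItemEvent {}
record ItemRemoved(String sku, int quantity, long unitPriceCents) implements LineItemEvent {}

final class OrderTotal {
    // The total is never stored: it is derived by applying every event, in order,
    // to the previous state, starting from the initial (empty) state.
    static long replay(List<LineItemEvent> history) {
        long totalCents = 0;
        for (LineItemEvent event : history) {
            if (event instanceof ItemAdded added) {
                totalCents += added.quantity() * added.unitPriceCents();
            } else if (event instanceof ItemRemoved removed) {
                totalCents -= removed.quantity() * removed.unitPriceCents();
            }
        }
        return totalCents;
    }
}
```

The function only reads the history. Running it over a prefix of the list therefore yields the total as it was at that earlier point.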

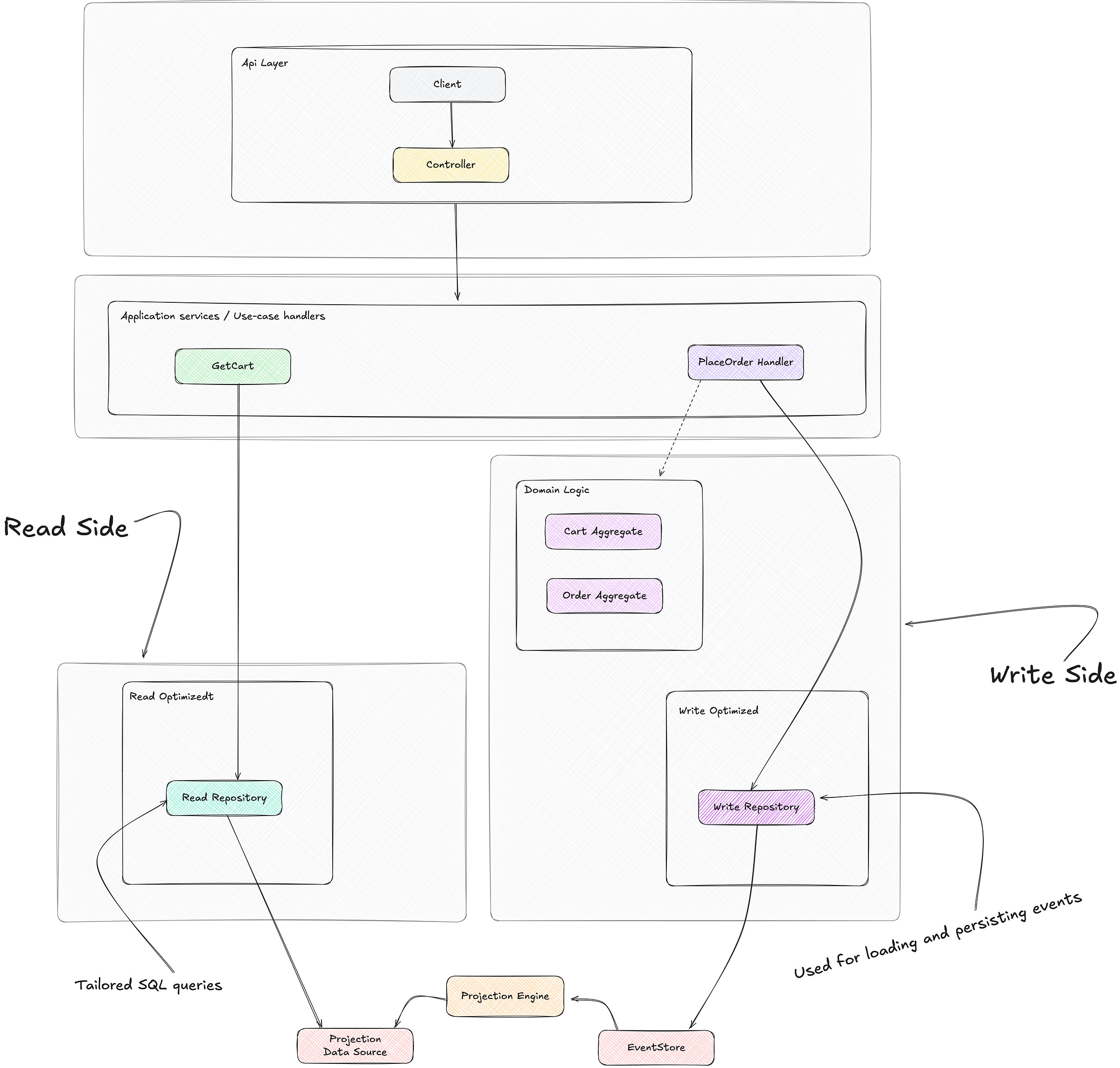

Architectural Pattern Overview

Structure and Components

Event Sourcing builds on the domain model we established with DDD, but changes how we store and reconstruct that model.

Command handlers continue to orchestrate use cases and manage transactions, but now they apply commands to aggregates and persist the resulting events rather than the resulting state.

The event store replaces traditional repositories as the primary persistence mechanism. It stores streams of domain events for each aggregate instance, preserving the complete history of state changes.
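Below is a minimal in-memory sketch of such a store. The method names mirror the `eventStore` calls used later in this section. `InMemoryEventStore` and `StoredEvent` are hypothetical; a real store would add durable storage and concurrency control.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical envelope: the payload plus its stream (aggregate) id and position in that stream.
record StoredEvent(String streamId, long version, Object payload) {}

// One append-only stream per aggregate instance, kept in memory for illustration.
final class InMemoryEventStore {
    private final Map<String, List<StoredEvent>> streams = new HashMap<>();

    // Appends events to the end of a stream; each gets the next sequential version number.
    void appendEvents(String streamId, List<?> payloads) {
        List<StoredEvent> stream = streams.computeIfAbsent(streamId, id -> new ArrayList<>());
        for (Object payload : payloads) {
            stream.add(new StoredEvent(streamId, stream.size() + 1, payload));
        }
    }

    // Returns a copy of one aggregate's full history, oldest first (empty if the stream is unknown).
    List<StoredEvent> getEventsForAggregate(String streamId) {
        return List.copyOf(streams.getOrDefault(streamId, List.of()));
    }
}
```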

Aggregates are reconstituted by replaying their event streams. Instead of loading the current state from a database, we load all events for an aggregate and apply them in sequence to rebuild the aggregate's state. Depending on the event store you choose, optimizations such as snapshots can reduce how many events must be replayed on each load.
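The snapshot optimization can be sketched with a hypothetical stock-level aggregate whose events carry their stream version. The loader starts from the snapshot's state and applies only events with a higher version. This sketch filters in memory for simplicity; a real event store would read only the tail of the stream. All names here are assumptions.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical event (with its position in the stream) and snapshot of state at a given version.
record StockAdjusted(long version, int delta) {}
record StockSnapshot(long version, int onHand) {}

final class SnapshotLoader {
    // Rebuilds state from the snapshot (if present) plus only the events recorded after it.
    static int load(Optional<StockSnapshot> snapshot, List<StockAdjusted> stream) {
        long fromVersion = snapshot.map(StockSnapshot::version).orElse(0L);
        int onHand = snapshot.map(StockSnapshot::onHand).orElse(0);
        for (StockAdjusted event : stream) {
            if (event.version() > fromVersion) { // events up to fromVersion are already in the snapshot
                onHand += event.delta();
            }
        }
        return onHand;
    }
}
```

Loading from the snapshot and replaying the full stream must yield the same state. That equivalence is a useful property to test whenever snapshots are introduced.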

Domain events become the central element of the architecture. They capture not just what changed but the business reason for the change, creating a meaningful log of all system activity.

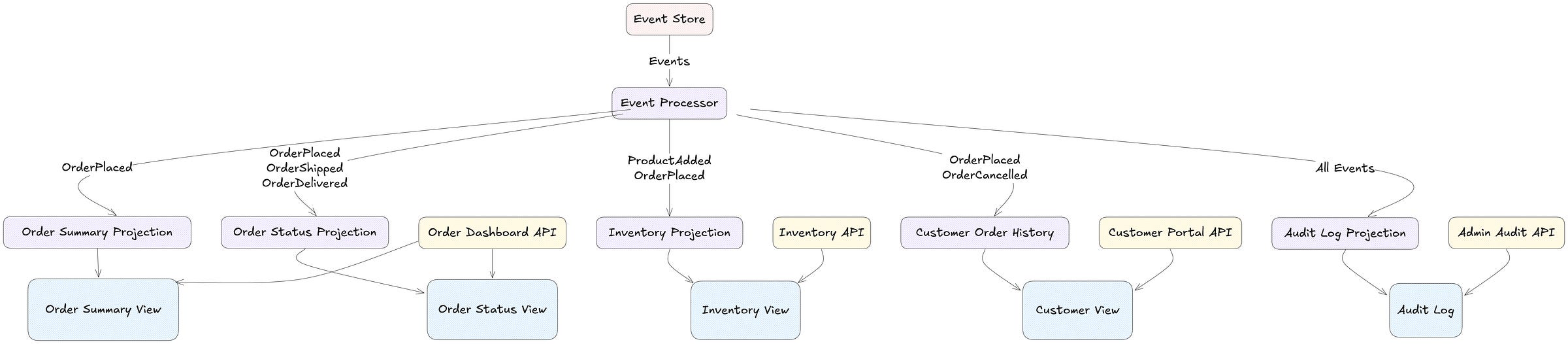

Projections transform event streams into optimized read models for specific query needs. Unlike in traditional CQRS, these projections are explicitly derived from the event store, potentially with different consistency and refresh characteristics.

Event handlers subscribe to domain events and trigger side effects, often updating projections or initiating other processes in response to significant domain events.
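A synchronous, in-process event bus is enough to show the subscription idea. `EventBus` and `OrderShipped` are hypothetical. Production systems more commonly rely on a message broker or the event store's own subscription feature, and they usually deliver events asynchronously.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Minimal synchronous event bus: handlers register per event class.
final class EventBus {
    private final Map<Class<?>, List<Consumer<Object>>> handlers = new HashMap<>();

    // Registers a handler for one event type.
    <E> void subscribe(Class<E> type, Consumer<E> handler) {
        handlers.computeIfAbsent(type, t -> new ArrayList<>())
                .add(event -> handler.accept(type.cast(event)));
    }

    // Delivers an event to every handler subscribed to its exact class, in registration order.
    void publish(Object event) {
        for (Consumer<Object> handler : handlers.getOrDefault(event.getClass(), List.of())) {
            handler.accept(event);
        }
    }
}

// Hypothetical domain event.
record OrderShipped(String orderId) {}
```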

Persistence and Reconstitution

The fundamental shift in Event Sourcing is how we persist and load aggregates:

Note: this is a deliberately naive example, meant only to illustrate the concept.

```java
// Traditional approach
Order order = orderRepository.findById(orderId); // Loads current state directly

// Event Sourcing approach
List<Event> events = eventStore.getEventsForAggregate(orderId);
Order order = new Order(orderId); // Create empty aggregate
events.forEach(order::apply);     // Reconstitute by applying events
```

When saving changes, instead of persisting the current state, we persist the new events:

```java
// Traditional approach
orderRepository.save(order); // Saves current state

// Event Sourcing approach
List<Event> newEvents = order.getNewEvents();
eventStore.appendEvents(orderId, newEvents);
order.clearNewEvents(); // Prevents appending the same events again on the next save
```

Aggregates themselves need to support both applying events to change state and handling commands to generate events:

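```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

class Order {

    private final String id;
    private OrderState state = OrderState.NEW;
    private final List<OrderLineItem> items = new ArrayList<>();
    private final List<Event> newEvents = new ArrayList<>();

    // calculateTotal() and item commands such as addItem() are omitted for brevity

    public Order(String id) {
        this.id = id;
    }

    // Command handler: checks business rules, then records the decision as an event
    public void place(Customer customer) {
        if (state != OrderState.NEW) {
            throw new InvalidStateException("Order must be in NEW state to place");
        }
        if (items.isEmpty()) {
            throw new ValidationException("Order must have at least one item");
        }
        // Record the event; applyNewEvent also applies it, so the state changes immediately
        OrderPlacedEvent event = new OrderPlacedEvent(this.id, customer.getId(), calculateTotal());
        applyNewEvent(event);
    }

    // Entry point for both replayed and new events: dispatches on the concrete event type
    public void apply(Event event) {
        if (event instanceof OrderPlacedEvent placed) {
            apply(placed);
        }
        // ... one branch per event type
    }

    // Event handler: only changes state, never validates (the event is already a fact)
    private void apply(OrderPlacedEvent event) {
        this.state = OrderState.PLACED;
        // Update other state based on event
    }

    // Apply and record new event
    private void applyNewEvent(Event event) {
        newEvents.add(event);
        apply(event);
    }

    // Get new events to persist
    public List<Event> getNewEvents() {
        return Collections.unmodifiableList(newEvents);
    }

    // Clear events after persistence
    public void clearNewEvents() {
        newEvents.clear();
    }
}
```

Projections and Read Models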

Projections transform event streams into optimized read models:

```java
class OrderSummaryProjection {

    private final OrderSummaryRepository orderSummaryRepository;

    OrderSummaryProjection(OrderSummaryRepository orderSummaryRepository) {
        this.orderSummaryRepository = orderSummaryRepository;
    }

    public void handle(OrderPlacedEvent event) {
        OrderSummary summary = new OrderSummary(
            event.getOrderId(),
            event.getCustomerId(),
            event.getOrderDate(),
            event.getTotal()
        );
        summary.setStatus("PLACED");
        orderSummaryRepository.save(summary);
    }

    public void handle(OrderShippedEvent event) {
        OrderSummary summary = orderSummaryRepository.findById(event.getOrderId());
        summary.setStatus("SHIPPED");
        summary.setShippingDate(event.getShippingDate());
        orderSummaryRepository.save(summary);
    }
}
```

These projections can be updated synchronously or asynchronously, depending on consistency requirements, and can create multiple different views of the same underlying event data.
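The sketch below builds two different read models from one event log. It uses hypothetical `OrderStatusView` and `CustomerSpendView` classes, with in-memory maps standing in for read-model storage.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical events for illustration.
interface OrderEvent { String orderId(); }
record OrderPlaced(String orderId, String customerId, long totalCents) implements OrderEvent {}
record OrderShipped(String orderId) implements OrderEvent {}

// View 1: current status per order, e.g. for an order-tracking screen.
final class OrderStatusView {
    final Map<String, String> statusByOrder = new HashMap<>();

    void handle(OrderEvent event) {
        if (event instanceof OrderPlaced placed) {
            statusByOrder.put(placed.orderId(), "PLACED");
        } else if (event instanceof OrderShipped shipped) {
            statusByOrder.put(shipped.orderId(), "SHIPPED");
        }
    }
}

// View 2: lifetime spend per customer, e.g. for an analytics dashboard.
final class CustomerSpendView {
    final Map<String, Long> spendByCustomer = new HashMap<>();

    void handle(OrderEvent event) {
        if (event instanceof OrderPlaced placed) {
            spendByCustomer.merge(placed.customerId(), placed.totalCents(), Long::sum);
        }
    }
}
```

Each view is derived, so a new one can be added later and back-filled by replaying the log from the beginning. Neither view constrains the other's shape.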

Complexity Management

Where Complexity Lives

Event Sourcing redistributes complexity in several ways:

Temporal complexity shifts from being implicit to explicit. The full history of state changes is a first-class concept in the system, not something reconstructed from logs or audit tables.

Projection complexity increases, as different query needs often require different projections of the event data. Instead of a single canonical representation, we maintain multiple derived views optimized for specific use cases.

Versioning complexity emerges when event schemas evolve over time. As our understanding of the domain grows, we need strategies for handling older event versions alongside newer ones.

Operational complexity increases with the need to manage event streams, projections, and their consistency. The system becomes more distributed, with eventual consistency between write and read sides.

How the Pattern Distributes Complexity

Event Sourcing provides powerful ways to manage complexity:

Historical analysis becomes straightforward since the complete event history is available. Auditing, debugging, and business intelligence all benefit from having the full sequence of state changes readily accessible.

Temporal queries become possible, allowing us to reconstruct the state of any aggregate at any point in time. This enables powerful capabilities for analysis, debugging, and compliance.
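A temporal query is a replay that stops early. The sketch below recovers a product's price on any past date. It assumes a hypothetical `PriceChanged` event stamped with the time it occurred, and a stream stored oldest first.

```java
import java.time.Instant;
import java.util.List;
import java.util.OptionalLong;

// Hypothetical event: a price change for a product and when it happened.
record PriceChanged(String productId, long priceCents, Instant occurredAt) {}

final class PriceHistory {
    // Replays events up to and including `asOf`; empty if no price had been set by then.
    static OptionalLong priceAsOf(List<PriceChanged> stream, Instant asOf) {
        OptionalLong price = OptionalLong.empty();
        for (PriceChanged event : stream) {
            if (event.occurredAt().isAfter(asOf)) {
                break; // this and all later events happened after the requested moment
            }
            price = OptionalLong.of(event.priceCents());
        }
        return price;
    }
}
```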

Event-driven integration is simplified since domain events are already a core part of the architecture. Events can be published to other systems or services without first building a separate change-tracking mechanism, although reliable delivery still requires messaging infrastructure.

Debugging is enhanced by the ability to replay events and see exactly how the system reached its current state. When issues occur, the complete history provides invaluable context for understanding what went wrong.

The Three Dimensions Analysis

Coordination

Coordination in Event Sourcing shifts toward event-based choreography. While command handlers still provide some orchestration for immediate operations, much of the system coordination happens through event handlers responding to domain events.

The event store becomes a crucial coordination point, ensuring events are stored in order and providing the foundation for eventual consistency across the system. This creates a more distributed coordination model compared to traditional transaction-based approaches.
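A common way for an event store to keep each stream in order is an expected-version check on append, known as optimistic concurrency. The sketch below is an in-memory illustration with hypothetical names. A writer states which version it loaded, and the append is rejected if another writer appended in the meantime.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Thrown when the stream changed since the writer loaded it (hypothetical type).
class ConcurrencyConflictException extends RuntimeException {
    ConcurrencyConflictException(String message) {
        super(message);
    }
}

final class VersionedEventStore {
    private final Map<String, List<Object>> streams = new HashMap<>();

    // Appends only if the stream still holds exactly `expectedVersion` events.
    synchronized void append(String streamId, long expectedVersion, List<?> events) {
        List<Object> stream = streams.computeIfAbsent(streamId, id -> new ArrayList<>());
        if (stream.size() != expectedVersion) {
            throw new ConcurrencyConflictException("stream " + streamId + " is at version "
                    + stream.size() + ", expected " + expectedVersion);
        }
        stream.addAll(events);
    }

    synchronized long currentVersion(String streamId) {
        return streams.getOrDefault(streamId, List.of()).size();
    }
}
```

The rejected writer would typically reload the aggregate, re-run the command against the fresh state, and try again.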

Long-running processes and sagas emerge as natural patterns for coordinating complex workflows. These processes track their state through events and can handle business processes that span multiple interactions or days.
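Here is a sketch of such a process, with all names hypothetical. The process manager reacts to events for a single order. It issues a `ShipOrder` command only once both payment and stock reservation have been observed, regardless of arrival order or duplicate delivery. In a real system its own progress would also be persisted, for example as an event stream of its own.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical events and command for an order-fulfilment workflow.
record OrderPlaced(String orderId) {}
record PaymentReceived(String orderId) {}
record StockReserved(String orderId) {}
record ShipOrder(String orderId) {}

// Process manager for one order: remembers what it has seen and decides the next step.
final class FulfilmentProcess {
    private final String orderId;
    private boolean paid;
    private boolean reserved;
    private boolean shipRequested;
    final List<Object> pendingCommands = new ArrayList<>(); // commands for a dispatcher to send

    FulfilmentProcess(OrderPlaced started) {
        this.orderId = started.orderId();
    }

    void on(Object event) {
        if (event instanceof PaymentReceived p && p.orderId().equals(orderId)) {
            paid = true;
        } else if (event instanceof StockReserved s && s.orderId().equals(orderId)) {
            reserved = true;
        }
        // Issue the command exactly once, even if events are redelivered.
        if (paid && reserved && !shipRequested) {
            shipRequested = true;
            pendingCommands.add(new ShipOrder(orderId));
        }
    }
}
```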

Communication

Communication in Event Sourcing becomes predominantly event-based. Domain events serve as the primary communication mechanism, carrying information about significant state changes to interested consumers.

Temporal decoupling increases as more communication happens asynchronously through events. This creates more resilient systems but requires careful design to manage eventual consistency and out-of-order message processing.

Intent-based communication becomes more prominent, with events capturing not just what changed but why it changed. This makes communication more meaningful and useful for both technical and business purposes.

Consistency

Consistency in Event Sourcing embraces eventual consistency as a fundamental property. While command processing can still be immediately consistent, the propagation of events to projections and other event handlers typically happens with eventual consistency.

Causal consistency becomes an important consideration, ensuring that events are processed in an order that preserves cause-and-effect relationships. This is especially important for event handlers that depend on the effects of previous events.
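One common way to preserve cause and effect within a single aggregate is for consumers to track the last version they applied per stream. In this sketch (hypothetical `Envelope` and `OrderedProjection`), redelivered events are skipped. A gap, meaning an earlier event has not arrived yet, is reported instead of being applied out of order. Real consumers typically retry or buffer in that case rather than fail.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical envelope: every event carries its aggregate id and its version within that stream.
record Envelope(String streamId, long version, String type) {}

final class OrderedProjection {
    private final Map<String, Long> lastApplied = new HashMap<>();
    final Map<String, String> statusByOrder = new HashMap<>();

    // Applies events per stream strictly in version order; returns true if the event was applied.
    boolean handle(Envelope event) {
        long last = lastApplied.getOrDefault(event.streamId(), 0L);
        if (event.version() <= last) {
            return false; // already applied (redelivery), so skip it
        }
        if (event.version() != last + 1) {
            // An earlier event for this stream is missing; applying now could break cause and effect.
            throw new IllegalStateException("gap in " + event.streamId() + ": expected version "
                    + (last + 1) + ", got " + event.version());
        }
        statusByOrder.put(event.streamId(), event.type());
        lastApplied.put(event.streamId(), event.version());
        return true;
    }
}
```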

Temporal consistency extends the consistency model to include historical states. The system can provide a consistent view not just of the current state but of any past state, creating powerful capabilities for analysis and auditing.

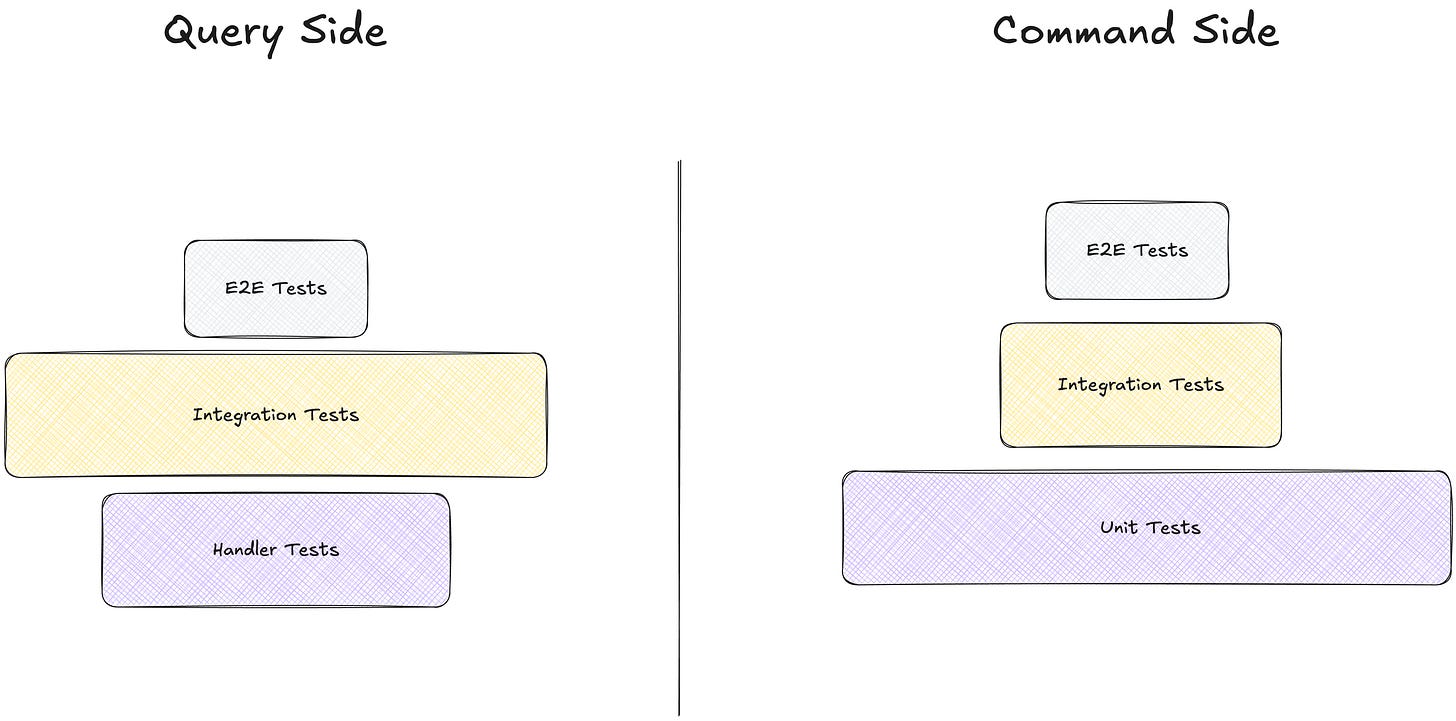

Testing Strategy

Testing Approach for Event Sourcing

Event Sourcing enables a particularly effective testing approach centered around events.

Command tests verify that commands generate the correct events when applied to aggregates in specific states. These tests focus on the business logic that determines how commands translate into events.

Event application tests verify that events properly update aggregate state when applied. These tests ensure that reconstructing aggregates from events produces the correct state.

Projection tests verify that projections correctly transform events into read models. These tests ensure that query capabilities remain accurate as the system evolves.

Event sequence tests verify that specific sequences of events lead to the expected aggregate state. These tests often use a given-when-then structure to describe the initial events, the command being tested, and the resulting events.

Testing Event-Sourced Aggregates

A common way to test event-sourced aggregates follows a given-when-then pattern:

```javascript
// Given - a sequence of events that represents the aggregate's history
const pastEvents = [
  new OrderCreatedEvent("order-123", "customer-456", "2023-06-01T10:15:00Z"),
  new ProductAddedEvent("order-123", "product-789", 2, 25.99),
  new ProductAddedEvent("order-123", "product-101", 1, 49.99),
  new ShippingAddressAddedEvent("order-123", "123 Main St", "Anytown", "CA", "12345"),
];
const order = new Order(); // Create empty aggregate
pastEvents.forEach((event) => order.apply(event)); // Reconstitute from history

// When - a new command is executed on the aggregate
const command = new PlaceOrderCommand("order-123");
const newEvents = order.handle(command); // Execute the command

// Then - verify the expected domain events were produced
expect(newEvents.length).toBe(1);
expect(newEvents[0]).toBeInstanceOf(OrderPlacedEvent);
expect(newEvents[0].orderId).toBe("order-123");
// 2 x 25.99 + 1 x 49.99 = 101.97; compare with a tolerance because of floating-point rounding
expect(newEvents[0].totalAmount).toBeCloseTo(101.97, 2);
```

This testing pattern directly mirrors how commands are processed in the production system:

The aggregate is reconstituted from its event history

A command is executed against the aggregate

New events are generated based on the command and current state

The test focuses on the business logic by verifying that the correct events are produced in response to the command, given the aggregate's current state. This approach is effective because:

It tests the actual decision-making logic of your aggregates

It verifies that your aggregates maintain consistency through event replay

It documents the expected behavior in terms of business events

It's resistant to implementation changes as long as the business behavior remains the same

You can create a comprehensive suite of tests covering different scenarios:

```javascript
describe("Order aggregate", () => {
  test("can place a valid order", () => {
    // Given events establishing a valid order...
    // When PlaceOrderCommand is handled...
    // Then OrderPlacedEvent should be produced...
  });

  test("cannot place an order without items", () => {
    // Given only OrderCreatedEvent but no items...
    // When PlaceOrderCommand is handled...
    // Then OrderValidationFailedEvent should be produced...
  });

  test("cannot place an order without shipping address", () => {
    // Given events for creation and items but no address...
    // When PlaceOrderCommand is handled...
    // Then OrderValidationFailedEvent should be produced...
  });

  test("cannot place an already placed order", () => {
    // Given events including OrderPlacedEvent...
    // When PlaceOrderCommand is handled again...
    // Then CommandRejectedEvent should be produced...
  });
});
```

This style of testing is particularly effective for event-sourced systems because it:

Tests behavior rather than implementation details

Documents the business rules in terms of events and commands

Provides a clear structure for test scenarios

Makes it easy to set up complex aggregate states for testing edge cases

When to Consider Event Sourcing

Event Sourcing shines in domains where:

Historical data has significant business value

Audit requirements demand complete history

Business processes span multiple interactions or days

Debugging and analysis benefit from temporal queries

Integration scenarios leverage domain events

It may not be suitable for:

Simple CRUD applications with minimal business logic

Systems with limited historical or audit requirements

High-throughput scenarios with minimal business events

Projects with tight timelines and limited domain complexity

NFR Analysis

Non-Functional Requirement Alignment

Auditability: ⭐⭐⭐⭐⭐ (Complete history of all state changes)

Flexibility: ⭐⭐⭐⭐⭐ (Multiple projections can serve diverse query needs)

Reliability: ⭐⭐⭐⭐ (History enables better debugging and recovery)

Testability: ⭐⭐⭐⭐⭐ (Event-based testing enables precise verification)

Performance: ⭐⭐⭐ (Can be challenging for high-volume event streams)

Complexity: ⭐⭐ (Increases overall architectural complexity)

Architect's Alert 🚨

Event Sourcing introduces significant architectural complexity. Apply it selectively to parts of your domain where the benefits justify this complexity—typically aggregates with important history, complex business processes, or strict audit requirements.

Be particularly careful with event schema evolution. Since events are immutable and form the historical record, changes to event schemas need a strategy for handling older events, such as versioning, transformation, or upcasting.
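Upcasting can be sketched as a function that converts any stored version of an event into the current shape when it is read, leaving the stored history untouched. The `RawEvent` and `OrderPlacedV2` types, the field names, and the `USD` default for old events are all illustrative assumptions.

```java
import java.util.Map;

// Hypothetical stored representation: event type, schema version, and raw fields.
record RawEvent(String type, int schemaVersion, Map<String, Object> data) {}

// Current (v2) shape that domain code works with.
record OrderPlacedV2(String orderId, long totalCents, String currency) {}

final class OrderPlacedUpcaster {
    // Converts any known stored version to the current shape at read time.
    static OrderPlacedV2 upcast(RawEvent raw) {
        return switch (raw.schemaVersion()) {
            // v1 stored a decimal "total" and no currency; "USD" is an assumed historical default.
            case 1 -> new OrderPlacedV2(
                    (String) raw.data().get("orderId"),
                    Math.round((Double) raw.data().get("total") * 100),
                    "USD");
            case 2 -> new OrderPlacedV2(
                    (String) raw.data().get("orderId"),
                    (Long) raw.data().get("totalCents"),
                    (String) raw.data().get("currency"));
            default -> throw new IllegalArgumentException(
                    "unknown OrderPlaced schema version " + raw.schemaVersion());
        };
    }
}
```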

Conclusion

Event Sourcing represents a significant leap in our architectural evolution, fundamentally changing how we think about state and history. By capturing the complete sequence of events that led to the current state, we gain powerful capabilities for auditing, analysis, debugging, and integration.

Our e-commerce platform now preserves the rich history of orders, products, customers, and other key domain concepts. This enables detailed auditing of order processing, historical analysis of product pricing strategies, and sophisticated business intelligence on customer behavior over time.

Questions for Reflection

How would preserving the complete history of state changes benefit your domain? Consider which aggregates in your system have the most valuable history.

Where in your system would the ability to reconstruct past states provide the most business value? Audit requirements, debugging scenarios, and business intelligence often benefit most from temporal queries.

What challenges might you face in adopting Event Sourcing for your existing domain model? Event schema evolution and projection management often present the biggest hurdles.